The long title for this post is: “Optimizing 19th Century Typewriters using 20th Century Code in the 21st Century”.

Patrick Honner recently shared Hardmath123’s wonderful article “Tuning a Typewriter”. In it, Hardmath123 explores finding the best way to order the letters A-Z on an old and peculiar typewriter. Rather than having a key for each letter as in a modern keyboard, the letters are laid out on a horizontal strip. You shift the strip left or right to find the letter you want, then press a key to enter it.

What’s the best way to arrange the letters on the strip? You probably want to do it in such a way that you have to shift left and right as little as possible. If consecutive letters in the words you’re typing are close together on the strip, you will minimize shifting and type faster.

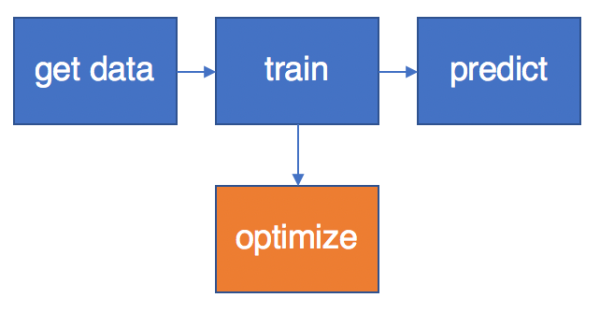

The author’s approach is to:

- Come up with an initial ordering at random,

- Compute the cost of the arrangement by counting how many shifts it takes to type out three well-known books,

- Try to find two letters that when you swap them results in a lower cost,

- Swap them and repeat until you can no longer find an improving swap.
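Here is a minimal sketch of that swap-based local search, not Hardmath123’s actual code. The names `shift_cost` and `two_swap_search` are hypothetical, the sample text stands in for the three books, and I am assuming that anything other than the letters A-Z is simply skipped.

```python
import random
import string


def shift_cost(order, text):
    """Total strip shifts needed to type `text` when the strip reads `order`."""
    pos = {ch: i for i, ch in enumerate(order)}
    # Assumption: characters that are not on the strip are ignored.
    letters = [ch for ch in text.upper() if ch in pos]
    return sum(abs(pos[a] - pos[b]) for a, b in zip(letters, letters[1:]))


def two_swap_search(text, seed=0):
    """Start from a random ordering and keep any swap that lowers the cost."""
    rng = random.Random(seed)
    order = list(string.ascii_uppercase)
    rng.shuffle(order)
    best = shift_cost(order, text)
    improved = True
    while improved:
        improved = False
        for i in range(len(order)):
            for j in range(i + 1, len(order)):
                order[i], order[j] = order[j], order[i]
                cost = shift_cost(order, text)
                if cost < best:
                    best = cost
                    improved = True
                else:
                    # Not an improvement: undo the swap.
                    order[i], order[j] = order[j], order[i]
    return "".join(order), best


# Hypothetical stand-in for the real test corpus.
sample = "the quick brown fox jumps over the lazy dog " * 3
layout, cost = two_swap_search(sample)
print(layout, cost)
```

The loop stops once a full pass over all pairs finds no improving swap, which is exactly the sense in which the result is only locally optimal.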

This is a strong approach that leads to the same locally optimal arrangements, even when you start from very different initial orderings. It turns out that this is an instance of a more general optimization problem with an interesting history: quadratic assignment problems. I will explain what those are in a moment.

Each time I want to type a letter, I have to know how far to shift the letter strip. That depends on two factors:

- The letter that I want to type in next, e.g. if I am trying to type THE and I am on “T”, “H” comes next.

- The location of the next letter relative to the current one. For example, if H is immediately to the left of T, then H is one shift away.

If I type in a bunch of letters, the total number of shifts can be computed from two matrices:

- A frequency matrix F. The entry in row R and column C is a count of how often letter R precedes letter C. If I encounter the word “THE” in my test set, then I will add 1 to F(“T”, “H”) and 1 to F(“H”, “E”).

- A distance matrix D. The entry in row X and column Y is the number of shifts between positions X and Y on the letter strip. For example, D(X, X+1) = 1 since position X is next to position X+1.

Since my problem is to assign letters to positions, if I permute the rows and columns of D according to the assignment, multiply that matrix entry by entry with F, and add up the results, I will get the total number of shifts required. We can easily compute F and D for the typewriter problem:

- To obtain F, we can just count how often one letter follows another and record entries in the 26 x 26 matrix. Here is a heatmap for the matrix using the full Project Gutenberg files for the three test books:

- The distance matrix D is simple: if position 0 is the extreme left of the strip and 25 the extreme right, d_ij = abs(i - j).
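Both matrices can be built in a few lines. This is a sketch under my own assumptions: the helper names `frequency_matrix` and `distance_matrix` are made up for illustration, letters are indexed A=0 through Z=25, and characters other than A-Z are skipped.

```python
import string

ALPHABET = string.ascii_uppercase
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def frequency_matrix(text):
    """F[r][c] counts how often letter r is immediately followed by letter c."""
    n = len(ALPHABET)
    F = [[0] * n for _ in range(n)]
    # Assumption: anything that is not A-Z is dropped before counting pairs.
    letters = [ch for ch in text.upper() if ch in INDEX]
    for a, b in zip(letters, letters[1:]):
        F[INDEX[a]][INDEX[b]] += 1
    return F


def distance_matrix(n=26):
    """D[i][j] is the number of shifts between strip positions i and j."""
    return [[abs(i - j) for j in range(n)] for i in range(n)]


F = frequency_matrix("THE")
D = distance_matrix()
print(F[INDEX["T"]][INDEX["H"]], F[INDEX["H"]][INDEX["E"]])  # 1 1
print(D[0][25], D[3][4])  # 25 1
```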

The total number of shifts is obtained by summing f_ij * d_p(i),p(j) for all i and j, where letter i is assigned to location p(i).
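To make the sum concrete, here is a small worked example on a toy three-letter alphabet. The function name `total_shifts` and the tiny alphabet are assumptions for illustration only.

```python
def total_shifts(F, D, p):
    """Sum F[i][j] * D[p[i]][p[j]] over all letter pairs (i, j)."""
    n = len(p)
    return sum(F[i][j] * D[p[i]][p[j]] for i in range(n) for j in range(n))


# Toy alphabet (hypothetical): T=0, H=1, E=2.
# Typing "THE" once gives F[T][H] = 1 and F[H][E] = 1.
F = [[0, 1, 0],
     [0, 0, 1],
     [0, 0, 0]]
D = [[abs(i - j) for j in range(3)] for i in range(3)]

in_order = [0, 1, 2]  # strip reads T H E
shuffled = [0, 2, 1]  # strip reads T E H
print(total_shifts(F, D, in_order))  # 2
print(total_shifts(F, D, shuffled))  # 3
```

With the strip in the order T H E, each of the two transitions costs one shift. Moving H to the far end makes T to H cost two shifts, so the total rises to three.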

Our problem boils down to finding a permutation that minimizes this sum. Since each term depends on a pair of assignments (where letter i goes and where letter j goes), the cost is quadratic in the assignment, and this is referred to as a Quadratic Assignment Problem (QAP). In fact, problems very similar to this one are part of the standard test suite of problems for QAP researchers, called “QAPLIB”. The so-called “bur” problems have similar flow matrices (the QAP term for what I called the frequency matrix F) but different distance matrices.

We can use any QAP solution approach we like to try to solve the typewriter problem. Which one should we use? There are two types of approaches:

- Those that lead to provably globally optimal solutions,

- Heuristic techniques that often provide good results, but no guarantees on “best”.

QAP is NP-hard, so finding provably optimal solutions is challenging. One approach for finding optimal solutions, called “branch and bound”, boils down to dividing and conquering by making partial assignments, solving less challenging versions of these problems, and pruning away assignments that cannot possibly lead to better solutions. I have written about this topic before. If you like allegories, try this post. If you prefer more details, try my PhD thesis.
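To give a flavor of branch and bound, here is a deliberately naive sketch on a five-letter toy instance. It is not my solver: the bound used here is just the cost of the pairs already placed, which is a valid lower bound only because every term in the sum is non-negative. Real QAP solvers use much stronger bounds. The flow matrix and all function names are hypothetical.

```python
from itertools import permutations


def qap_cost(F, D, p):
    n = len(p)
    return sum(F[i][j] * D[p[i]][p[j]] for i in range(n) for j in range(n))


def branch_and_bound(F, D):
    """Assign letters 0, 1, 2, ... to locations one at a time, pruning as we go."""
    n = len(F)
    best = {"cost": float("inf"), "perm": None}

    def extend(perm, used, partial):
        # Prune: costs only grow, so this branch cannot beat the incumbent.
        if partial >= best["cost"]:
            return
        k = len(perm)  # next letter to place
        if k == n:
            best["cost"], best["perm"] = partial, perm[:]
            return
        for loc in range(n):
            if loc in used:
                continue
            # Cost added by letter k at `loc` against all letters already placed.
            added = F[k][k] * D[loc][loc]
            for i, pi in enumerate(perm):
                added += F[i][k] * D[pi][loc] + F[k][i] * D[loc][pi]
            perm.append(loc)
            used.add(loc)
            extend(perm, used, partial + added)
            perm.pop()
            used.remove(loc)

    extend([], set(), 0)
    return best["perm"], best["cost"]


# Hypothetical five-letter flow matrix, for illustration only.
F = [[0, 5, 2, 0, 1],
     [3, 0, 4, 1, 0],
     [1, 2, 0, 6, 0],
     [0, 1, 3, 0, 2],
     [2, 0, 0, 1, 0]]
D = [[abs(i - j) for j in range(5)] for i in range(5)]

bb_perm, bb_cost = branch_and_bound(F, D)
# Cross-check against brute force, which is only feasible at this tiny size.
brute_cost = min(qap_cost(F, D, p) for p in permutations(range(5)))
print(bb_perm, bb_cost, brute_cost)
```

At size 5 brute force only has 120 permutations to try. At size 26 there are 26! of them, which is why the quality of the bound matters so much.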

The typewriter problem is size 26, which counts as “big” in the world of QAP. Around 20 years ago I wrote a very capable QAP solver, so I recompiled it and ran it on this problem – but didn’t let it finish. I am pretty sure it would take at least a day of CPU time to solve, and perhaps more. It would be interesting to see if someone could find a provably optimal solution!

In the meantime, this still leaves us with heuristic approaches. Here are a few possibilities:

- Local optimization (Hardmath123’s approach finds a locally optimal “2-swap”)

- Simulated annealing

- Evolutionary algorithms
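As one example from this list, here is a bare-bones simulated annealing sketch for a small QAP. It is a generic textbook version, not FANT and not tuned in any way: the geometric cooling schedule, the parameter values, and the random toy flow matrix are all assumptions for illustration.

```python
import math
import random


def qap_cost(F, D, p):
    n = len(p)
    return sum(F[i][j] * D[p[i]][p[j]] for i in range(n) for j in range(n))


def simulated_annealing(F, D, steps=5000, t_start=10.0, t_end=0.01, seed=0):
    rng = random.Random(seed)
    n = len(F)
    p = list(range(n))
    rng.shuffle(p)
    cost = qap_cost(F, D, p)
    best_p, best_cost = p[:], cost
    for step in range(steps):
        # Geometric cooling from t_start down towards t_end.
        t = t_start * (t_end / t_start) ** (step / steps)
        i, j = rng.sample(range(n), 2)
        p[i], p[j] = p[j], p[i]
        new_cost = qap_cost(F, D, p)
        delta = new_cost - cost
        # Always accept improvements; accept worse moves with probability exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            cost = new_cost
            if cost < best_cost:
                best_p, best_cost = p[:], cost
        else:
            p[i], p[j] = p[j], p[i]  # reject: undo the swap
    return best_p, best_cost


# Hypothetical 8-letter instance with random flows, for illustration only.
n = 8
gen = random.Random(1)
F = [[0 if i == j else gen.randint(0, 9) for j in range(n)] for i in range(n)]
D = [[abs(i - j) for j in range(n)] for i in range(n)]

sa_perm, sa_cost = simulated_annealing(F, D)
print(sa_perm, sa_cost)
```

Unlike the pure swap search, this occasionally accepts a worse arrangement, which gives it a chance to escape a poor local optimum. It still offers no guarantee of finding the best one.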

I ran a heuristic written by Éric Taillard called FANT (Fast ant system). I was able to re-run his 1998 code on my laptop and within seconds I was able to obtain the same permutation as Hardmath123. By the way, the zero-based permutation is [9, 21, 5, 6, 12, 19, 3, 10, 8, 24, 1, 16, 18, 7, 15, 22, 25, 14, 13, 11, 17, 2, 4, 23, 20, 0] (updated 12/7/2018 – a previous version of this post gave the wrong permutation. Thanks Paul Rubin for spotting the error!)
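If you want to turn that list into an actual letter strip, something like the following should work. I am assuming here that the list uses the same convention as the cost formula, i.e. the i-th letter of the alphabet (A=0, ..., Z=25) is assigned to location p(i).

```python
import string

# Zero-based permutation quoted in the post.
p = [9, 21, 5, 6, 12, 19, 3, 10, 8, 24, 1, 16, 18, 7, 15, 22, 25,
     14, 13, 11, 17, 2, 4, 23, 20, 0]

# Sanity check: every location 0..25 appears exactly once.
assert sorted(p) == list(range(26))

# Assumption: p[i] is the strip location of the i-th letter.
strip = [None] * 26
for letter, location in zip(string.ascii_uppercase, p):
    strip[location] = letter
layout = "".join(strip)
print(layout)
```

Under that reading, common letters such as H, T, E and S end up next to each other near the middle of the strip, which is what you would expect from a good arrangement.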

You can get the data for this problem, as well as a bit of Python code to experiment with, in this git repository.

It’s easy to think up variants to this problem. For example, what about mobile phones? Other languages? Adding punctuation? Gesture-based entry? With QAPs, anything is possible, even if optimality is not practical.