Parfit: A Philosopher and His Mission to Save Morality is the story of a philosopher who gambled with his life.

David Edmonds is warm and particular in his profile of Derek Parfit, a British philosopher born in China who considered questions of personal identity, choice, ethics, and morality.

I came for the philosophy but stayed for the human drama. Parfit is a chronicle of a journey into the monomaniacal. A boy with eccentricities enthusiastically expressed through a range of eclectic interests becomes a man with singular focus on his philosophy. This focus draws him near to some and alienates others.

By the end of the book I was looking back wistfully at Parfit’s own youth and was left wondering whether his path was inevitable, and whether the path was worth it. The reflexive answer to the latter question is, “Of course it was worth it! Look at the ideas he gave to the world and how they influenced so many others!” I can’t be sure of that. I can’t put those things on the scale. Ironically, exploring this question only leads me back to the moral theories of Parfit’s own work.

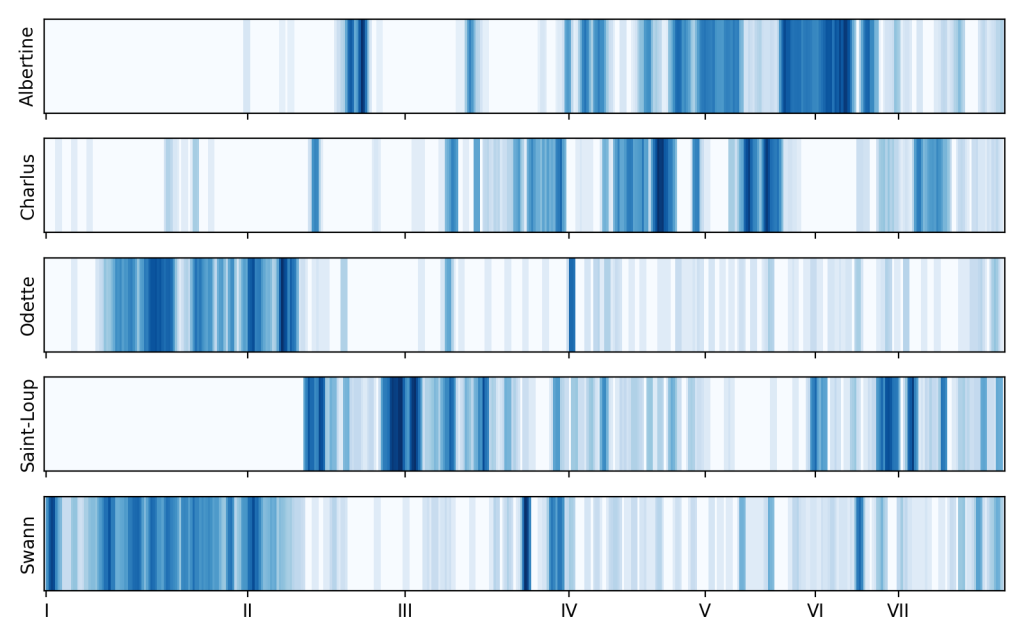

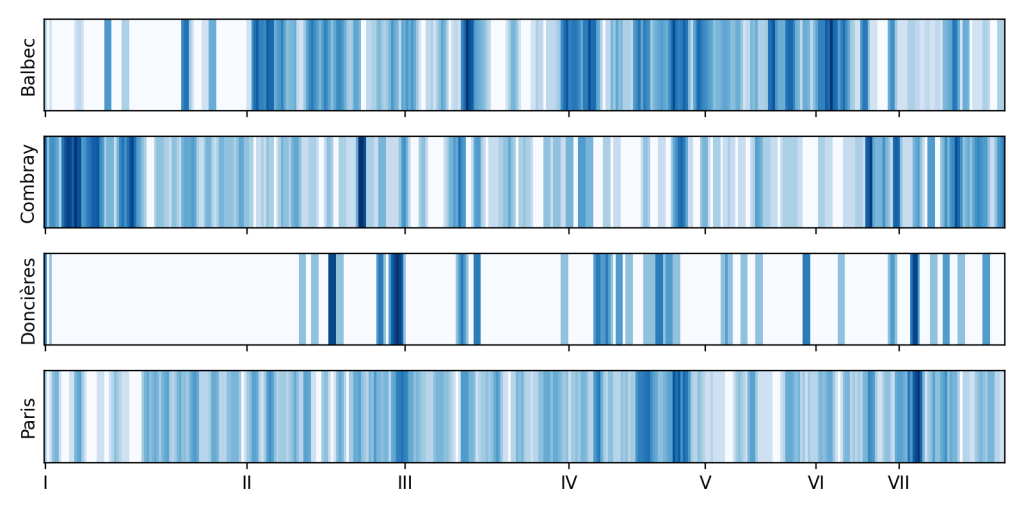

I bought Parfit’s Reasons and Persons many years ago and dropped it after a few pages. It was way too long and way too difficult for me. Many have said the same of Proust. Like Proust’s protagonist, Parfit was faced with two paths: devotion to philosophy or a fully lived life. Edmonds describes the personal (and at times touching) impact that Parfit’s work had on the philosophical community. So it can be said that his paths were joined, but not in Parfit. This is just as the two paths of In Search of Lost Time (art and human connection) are joined not in Marcel, but in Swann’s daughter.

Edmonds’ work makes plain further parallels between Proust and Parfit. Each eventually wins you over to his inner world. If you stick with In Search of Lost Time, Proust becomes a friend, or at the very least a roommate in a very small apartment. I suspect knowing Parfit would have been a similar experience. They wear you down, or you leave. Both men are monomaniacal: Proust in his cork-lined room, living through intermediaries, writing letters to his neighbors and treating life as a distraction from art. Parfit in his upstairs hovel, living through his colleagues, writing detailed notes in response to anything he is sent, and treating life as a distraction from his philosophy.

Parfit did not believe in the self, unlike Proust. He understood “us” to be psychologically continuous thinking machines, and I am understood as “me” by others because “they” recognize “me” as a thinking machine. Proust, on the other hand, believed almost exclusively in the self. The entire arc of In Search of Lost Time explores two possible paths of the self, those of “Swann” (art) and “Guermantes” (human connection). Both Proust and Parfit were obsessed with exploring as many of the details and consequences of their paths as possible, blowing out the length and latency of their books to implausible widths.

But back to the self. Parfit made an indelible mark on those he worked with, and also on those who have closely explored his work. In the memories of others there lies some fraction of psychological continuity with Parfit’s former physical being. By his own definition, Parfit is only mostly dead. He lives on now through his work, just as Proust believed that there can be eternality in a work of art.

So did Bulgakov. Woland in The Master and Margarita famously said, “manuscripts don’t burn.” This is a rare case where the devil was right.

Parfit was a fun read and far shorter than Parfit’s own works, yet I felt as if I came away with something of his essence.