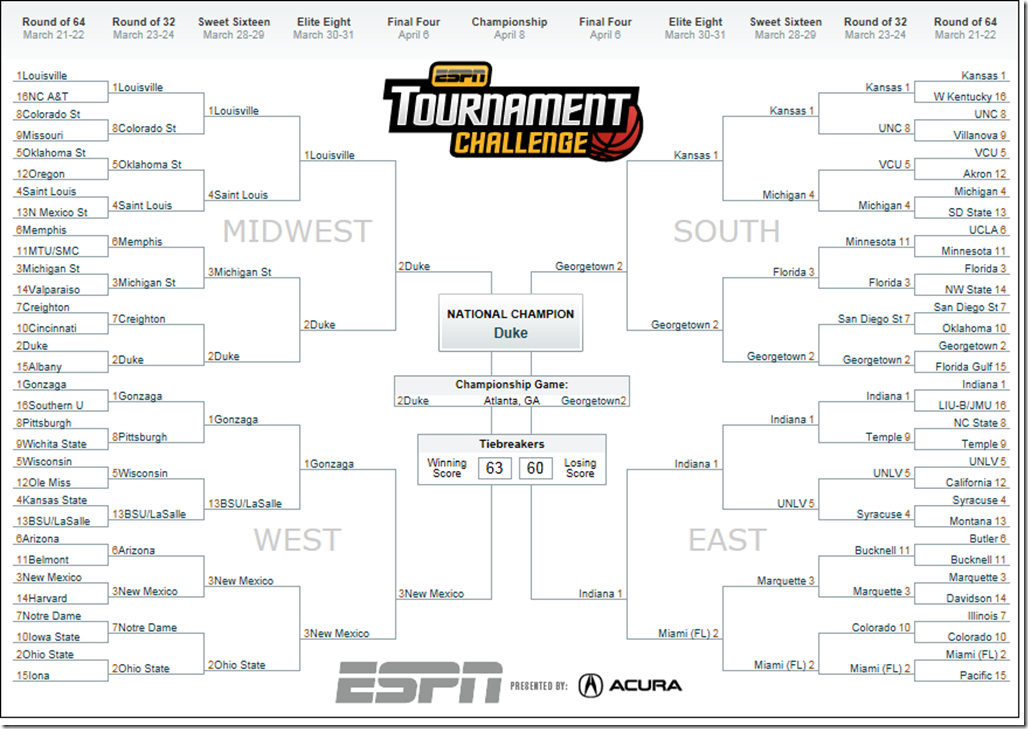

I revealed my analytics model’s 2014 NCAA Tournament picks in yesterday’s post. Today, I want to describe how the ratings were determined. (Fair warning: this post will be quite a bit more technical and geeky.)

Click here to download the Python model source code.

My NCAA prediction model computes a numerical rating for each team in the field. Picks are generated by comparing team ratings: the team with the higher rating is predicted to advance. As I outlined in my preview, the initial model combines two ideas:

- A “win probability” model developed by Joel Sokol in 2010 as described on Net Prophet.

- An eigenvalue centrality model based on this post on BioPhysEngr Blog.

The eigenvalue centrality model creates a big network (also called a graph) that links all NCAA teams. The arrows in the network represent games between teams. Eigenvalue centrality (more commonly called eigenvector centrality) analyzes the network to determine which network nodes (that is, which teams) are strongest. The model I described in my preview was pretty decent, but it failed to address two important issues:

- Recently played games should count more than games at the beginning of the season.

- Edge weights should reflect the probability one team is stronger than another, rather than probability one will beat another on a neutral floor.
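Before digging into those two issues, here is a minimal sketch of the centrality idea itself. It is not the downloadable model code: the function name `centrality`, the three made-up teams, and the convention that `weights[i][j]` holds the evidence that team i is stronger than team j are all assumptions for illustration. The scores come from power iteration, which repeatedly re-rates each team from the ratings of the teams it was judged stronger than.

```python
def centrality(weights, iterations=100):
    """Approximate the dominant eigenvector of a weight matrix by power iteration.

    weights[i][j] is assumed to hold the evidence that team i is stronger
    than team j (0.0 when the two teams never played).
    """
    n = len(weights)
    scores = [1.0 / n] * n  # start with every team rated equally
    for _ in range(iterations):
        # New score for team i: weighted sum of the current scores of the
        # teams it is linked to.
        updated = [sum(weights[i][j] * scores[j] for j in range(n)) for i in range(n)]
        # Rescale to unit length so the numbers do not shrink or blow up.
        norm = sum(x * x for x in updated) ** 0.5
        scores = [x / norm for x in updated]
    return scores


# Hypothetical three-team network (made-up weights, not real results).
teams = ["Team A", "Team B", "Team C"]
weights = [
    [0.0, 0.9, 0.8],
    [0.1, 0.0, 0.7],
    [0.2, 0.3, 0.0],
]
ratings = sorted(zip(teams, centrality(weights)), key=lambda pair: pair[1], reverse=True)
for name, score in ratings:
    print(name, round(score, 3))
```

In this toy network Team A comes out on top because it holds the heaviest edges over both other teams. A real model would build the matrix from every game played during the season.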

The first issue is easy to explain. In my initial model, game-by-game results were analyzed to produce edge weights in a giant network linking teams. The weight was simply the formula given by Joel Sokol in his 2010 paper. However, it seems reasonable that more recently played games are more important, from a predictive perspective, than early-season games. To account for this, I scale the final margin of victory for more recently played games by a “recency” factor R. If one team beats another by K points at the start of the season, we apply the Sokol formula with K. However, if one team beats another by K points at the end of the season, we apply the formula with R*K. If R=2, that means a 10-point victory at the start of the season is worth the same as a 5-point victory at the end. If the game was in the middle of the season, we’d apply half of the adjustment: the multiplier would be 1.5, so a 5-point victory would count as 7.5 points.
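The recency adjustment can be sketched in a few lines. The function name `recency_scaled_margin` and the `season_fraction` argument (0.0 for the first game of the season, 1.0 for the last) are hypothetical, and the straight-line interpolation between 1 and R is an assumption based on the "half of the adjustment" example above.

```python
def recency_scaled_margin(margin, season_fraction, recency=1.5):
    """Scale a margin of victory by how late in the season the game was played.

    season_fraction: 0.0 = start of the season, 1.0 = end of the season.
    The multiplier grows linearly from 1 to `recency` (an assumed shape).
    """
    factor = 1.0 + (recency - 1.0) * season_fraction
    return margin * factor


# With R = 2, a 5-point win is worth more the later it happens.
print(recency_scaled_margin(5, 0.0, recency=2))  # 5.0
print(recency_scaled_margin(5, 0.5, recency=2))  # 7.5
print(recency_scaled_margin(5, 1.0, recency=2))  # 10.0
```

The scaled margin, rather than the raw one, is what then gets plugged into the Sokol formula.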

The second issue – regarding edge weights and team strength – is more subtle. As you saw in the “Top 25” from my preview post, there were some strange results. For example, Canisius was rated #24. The reason is that the Sokol formula is not very sensitive to small margins of victory.

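Let’s look at an example. Here is the Sokol formula: phi(0.0189 * x - 0.0756), where phi is the standard normal cumulative distribution function and x is the home team’s margin of victory.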

If you try margins of 1 through 6 points, you get the probabilities [0.477, 0.485, 0.492, 0.5, 0.508, 0.515]. The difference between a 1-point home win and a 6-point home win is only 0.515 – 0.477 ≈ 0.038, or a little under 4 percentage points. As a result, most of the nonzero values in the big adjacency matrix that we create are around 0.5, and consequently our centrality method identifies teams that are influential in the network, rather than teams that are dominant. One way to find teams that are dominant is to scale the margin of victory so that a 6-point victory is worth much more than a 1-point victory. So the hack here is to substitute S*x for x in the formula, where S is a “sensitivity” scaling factor.
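To check these numbers, here is a small sketch that evaluates the formula with and without the sensitivity factor. The helper names `normal_cdf` and `sokol_weight` are made up for this example and are not taken from the downloadable source. The standard normal CDF is computed from `math.erf`.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def sokol_weight(margin, sensitivity=1.0):
    """Sokol formula with S*x substituted for x (S = 1 gives the original)."""
    return normal_cdf(0.0189 * sensitivity * margin - 0.0756)


margins = range(1, 7)
baseline = [round(sokol_weight(x), 3) for x in margins]
scaled = [round(sokol_weight(x, sensitivity=2.0), 3) for x in margins]

print(baseline)  # [0.477, 0.485, 0.492, 0.5, 0.508, 0.515]
print(scaled)
```

With S = 2, the gap between a 1-point and a 6-point win roughly doubles, because the formula is close to a straight line over this range of margins. Larger values of S spread the edge weights further from 0.5.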

One last tiny adjustment I made was to pretend that Joel Embiid did not play this year, so that Kansas’s rating reflects their strength without him. Long story short, I subtracted 1.68 points for all games that Joel Embiid appeared in. This post has the details.

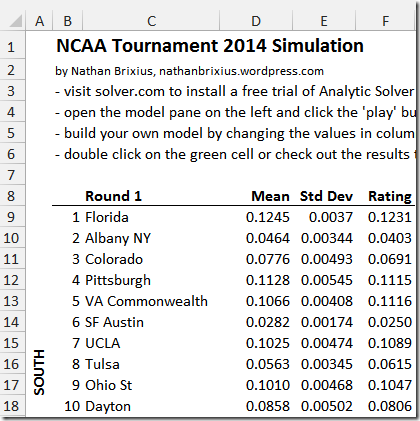

My Python code implements everything I described in this post and the preview. I generated the picks by choosing the recency parameter R = 1.5 and sensitivity parameter S = 2. Here is a sample call and output:

scoreNcaa(25, 20, 2, 1.5, 0)

['Virginia', 0.13098760857436742]

['Florida', 0.12852960094006807]

['Duke', 0.12656196253849666]

['Kansas', 0.12443601960952431]

['Michigan St', 0.12290861109638007]

['Arizona', 0.12115701603335856]

['Wisconsin', 0.11603580613955565]

['Pittsburgh', 0.11492421298144373]

['Michigan', 0.11437543620057213]

['Iowa St', 0.1128795675290855]

If you’ve made it this far, and have the source code, you can figure out what most of the other parameters mean. (Or you can ask in the comments!)

The question “Why did Virginia come out first?” is difficult to answer succinctly. Basically:

- Virginia, Florida, and Duke are all pretty close.

- Virginia had a consistently strong schedule.

- Their losses were, generally speaking, close games against strong opponents.

- They had several convincing, recent victories over other very strong teams.

In a future post, I will provide an Excel spreadsheet that will allow you to build and simulate your own NCAA tournament models!